
24th May 2025

In the ever-evolving world of networking, Ethernet cabling standards have come a long way. From the humble beginnings of Cat 5 to the latest Cat 8, each new Category promises faster speeds and better shielding. Along this evolutionary path, Cat 7 emerged, boasting superior shielding and speeds of up to 10 Gbps over 100 metres.

What happened to Cat 7?

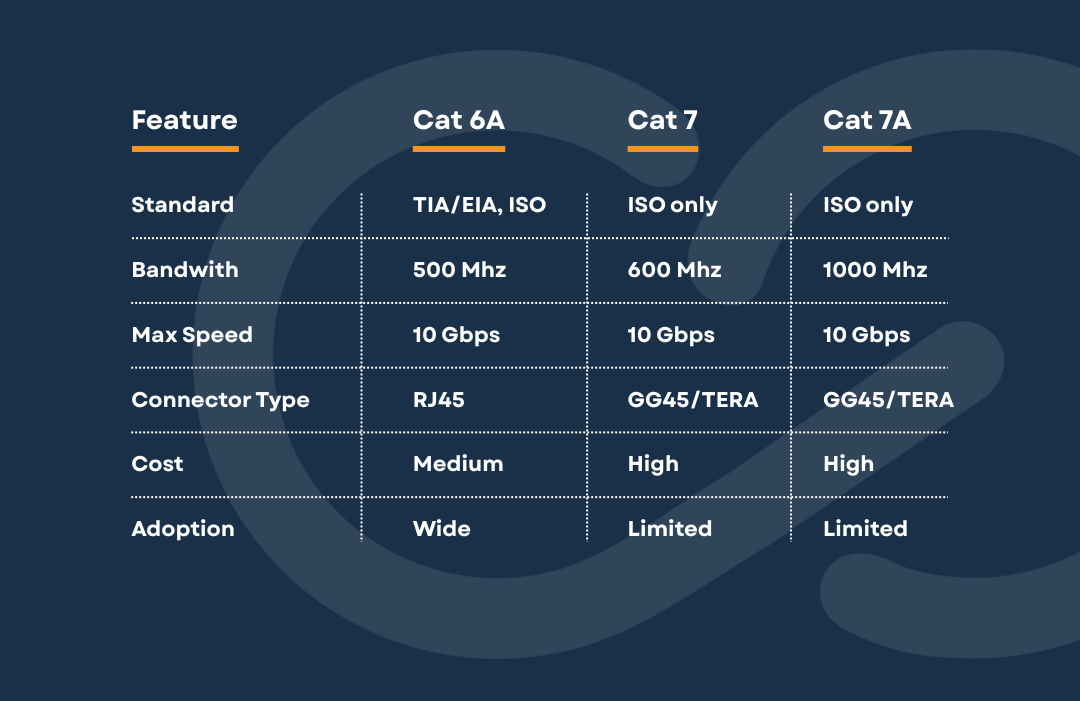

At a glance, Cat 7 seemed like the obvious upgrade thanks to its improved shielding, higher frequency rating of 600 MHz and future-ready bandwidth. It was particularly attractive in environments with a lot of interference: industrial settings, data centres, or high-density networks.

So... where did it go wrong?

- Cable Contradiction - Cat 7 was introduced in 2002, before 10GBASE-T was officially standardized by the IEEE in 2006. Without an established protocol to target, Cat 7 lacked a concrete benchmark, resulting in a contradiction between marketing claims and the actual, standardized network capability of the time.

- Lack of Recognition - Cat 7 was never officially recognized by ANSI/TIA, so it never gained the same industry acceptance as other Categories such as Cat 5e, Cat 6, or Cat 6A. It remained an ISO/IEC 11801 standard used mostly in Europe, limiting its global reach.

- Connector Incompatibility - To reach its rated performance, Cat 7 relied on GG45 or TERA connectors instead of the industry-standard RJ45, and most existing network hardware did not use them. This mismatch with installed equipment discouraged adoption, especially when more practical, RJ45-based options like Cat 6A offered similar performance at lower cost.

- High Cost - Its advanced shielding and specialised connectors made Cat 7 more expensive to produce and install than Cat 6A.

Skipped in Favour of Cat 6A
